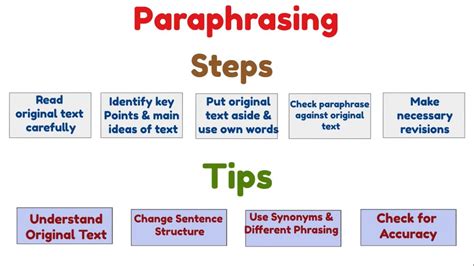

5 Steps to Scrape Data from Websites

Introduction to Scraping

Scraping, also known as web scraping, is the process of automatically extracting data from websites, web pages, and online documents. It is widely used for data mining, monitoring, and research. As the amount of data available online grows, scraping has become an essential tool for businesses, researchers, and individuals who need to gather and analyze that data. This article walks through the 5 steps to scrape data from websites.

Step 1: Inspect the Website

The first step is to inspect the website you want to extract data from. Analyze its structure, HTML tags, and JavaScript code to locate the data you need; the developer tools in your web browser let you inspect individual elements and see how the data is structured. If the data is loaded by JavaScript, it may not appear in the raw HTML the server sends, which affects the tool you choose in the next step. Before going further, check whether the website's robots.txt file or terms of service prohibit web scraping.
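
Python's standard library can check robots.txt rules for you. The sketch below uses the urllib.robotparser module; the robots.txt content, the user agent name "my-scraper", and the example.com URLs are hypothetical placeholders. It parses the rules from a string so that it runs offline; for a real site you would call set_url() and read() instead. Note that it only covers robots.txt: the terms of service still have to be read by a person.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; a real file lives at https://<site>/robots.txt
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Allow: /
"""


def check_urls(robots_txt: str, user_agent: str, urls: list) -> dict:
    """Return, for each URL, whether robots.txt allows user_agent to fetch it."""
    parser = RobotFileParser()
    # Parse the rules from text instead of downloading them with read()
    parser.parse(robots_txt.splitlines())
    return {url: parser.can_fetch(user_agent, url) for url in urls}


if __name__ == "__main__":
    results = check_urls(
        ROBOTS_TXT,
        "my-scraper",  # hypothetical user agent name
        ["https://example.com/products", "https://example.com/private/report.html"],
    )
    for url, allowed in results.items():
        print(("allowed: " if allowed else "blocked: ") + url)
```

With these sample rules, /products is allowed and anything under /private/ is blocked, because the first matching rule for the user agent wins.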

Step 2: Choose a Web Scraping Tool

Web scraping tools range from simple command-line utilities to full visual scraping applications. The right choice depends on your programming skills, the complexity of the website, and the type of data you want to scrape. Popular options include Beautiful Soup, Scrapy, and Selenium. Selenium, for example, drives a real browser, so it can handle pages that render their content with JavaScript. Choose the tool that suits your needs and skill level.

Step 3: Send an HTTP Request

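The next step is to send an HTTP request to the website to retrieve the page. Scrapy and Selenium fetch pages themselves, whereas Beautiful Soup only parses HTML, so with it you pair a separate HTTP client library. The request includes the URL of the webpage, any headers (such as User-Agent), and other required parameters, such as query-string values. You can also use the Accept header to state which format you prefer, such as HTML, JSON, or CSV, although the server decides what it actually returns.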
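
If your tool only parses HTML, Python's standard urllib.request module can send the request. The sketch below builds a GET request with query parameters plus User-Agent and Accept headers; the URL, the page parameter, and the user-agent string are hypothetical. The fetch() function performs the actual network call and decodes the body with the charset the server declares, falling back to UTF-8 as an assumption.

```python
from urllib.parse import urlencode
from urllib.request import Request, urlopen


def build_request(base_url: str, params: dict, accept: str = "text/html") -> Request:
    """Build a GET request with query parameters and identifying headers."""
    query = urlencode(params)
    url = f"{base_url}?{query}" if query else base_url
    headers = {
        "User-Agent": "example-scraper/0.1 (contact@example.com)",  # hypothetical identifier
        "Accept": accept,  # preferred format; the server may ignore it
    }
    return Request(url, headers=headers)


def fetch(request: Request, timeout: float = 10.0) -> str:
    """Send the request and return the response body as text."""
    with urlopen(request, timeout=timeout) as response:
        # Use the charset from the Content-Type header, assuming UTF-8 if absent
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset)


if __name__ == "__main__":
    req = build_request("https://example.com/products", {"page": 2})
    print(req.full_url)  # https://example.com/products?page=2
```

Keeping request construction separate from fetch() makes the headers and URL easy to check without touching the network.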

Step 4: Parse the HTML Content

The response to your HTTP request contains the HTML content of the webpage. The next step is to parse that HTML to extract the data you want, using a parsing library such as Beautiful Soup or lxml. These libraries let you navigate the HTML elements, extract their values, and store them in a structured format.
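
As a dependency-free illustration, the sketch below uses Python's built-in html.parser module instead of Beautiful Soup or lxml; those libraries offer a more convenient search API, but the idea is the same. It assumes hypothetical markup in which each product sits in an element with class "product" and its name and price sit in child elements with classes "name" and "price". Real pages need selectors that match what you found in Step 1.

```python
from html.parser import HTMLParser


class ProductParser(HTMLParser):
    """Collect name/price pairs from hypothetical 'product' blocks."""

    def __init__(self):
        super().__init__()
        self.records = []   # one dict per product block
        self._field = None  # field whose text is currently being read

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs; value may be None
        classes = (dict(attrs).get("class") or "").split()
        if "product" in classes:
            self.records.append({})  # start a new record
        elif self.records and "name" in classes:
            self._field = "name"
        elif self.records and "price" in classes:
            self._field = "price"

    def handle_endtag(self, tag):
        self._field = None  # simplification: any closing tag ends the field

    def handle_data(self, data):
        text = data.strip()
        if self._field and text:
            self.records[-1][self._field] = text


def parse_products(html: str) -> list:
    parser = ProductParser()
    parser.feed(html)
    parser.close()
    return parser.records


if __name__ == "__main__":
    sample = (
        '<div class="product"><h2 class="name">Widget</h2>'
        '<span class="price">$9.99</span></div>'
    )
    print(parse_products(sample))  # [{'name': 'Widget', 'price': '$9.99'}]
```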

Step 5: Store the Data

The final step is to store the scraped data in a format that is easy to analyze and use: a database such as MySQL or MongoDB, or a CSV or JSON file. Whichever you choose, keep the data structured, for example one record per item with consistent field names, so that it stays easy to analyze and use.
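
For the file-based options, Python's standard csv and json modules are enough. The sketch below turns a list of scraped records (hypothetical dictionaries like those a parser might return) into CSV and JSON text. Collecting the column names from all records is a design choice: a record that lacks a field still produces a row, with an empty cell.

```python
import csv
import io
import json


def records_to_csv(records: list) -> str:
    """Serialize a list of dicts to CSV text with a header row."""
    # Union of keys across all records, sorted for a stable column order
    fieldnames = sorted({key for record in records for key in record})
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fieldnames)  # missing keys become ""
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()


def records_to_json(records: list) -> str:
    """Serialize a list of dicts to pretty-printed JSON text."""
    return json.dumps(records, indent=2, ensure_ascii=False)
```

To persist the output, write the returned strings to files; open the CSV file with newline="" so the line endings produced by the csv module are kept as-is.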

💡 Note: Always check the website's terms of service and robots.txt file before scraping data to ensure that you are not violating any rules.

Here is a sample table showing the tools used for web scraping:

| Tool | Description |
|---|---|
| Beautiful Soup | HTML parsing library |
| Scrapy | Web scraping framework |
| Selenium | Browser automation tool |

To summarize, the 5 steps to scrape data from websites are: inspect the website, choose a web scraping tool, send an HTTP request, parse the HTML content, and store the data. By following these steps, you can extract data from websites and use it for a wide range of purposes.

What is web scraping?


Web scraping is the process of automatically extracting data from websites, web pages, and online documents.

What are the benefits of web scraping?


Web scraping automates data collection for purposes such as data mining, monitoring, and research, and it helps businesses gather and analyze data.

Is web scraping legal?


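Web scraping is generally acceptable when you respect the website's terms of service and robots.txt file, but its legality also depends on your jurisdiction and on the type of data you collect, such as personal or copyrighted data. Always check the terms of service and robots.txt file before scraping data.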